History of Nanotechnology

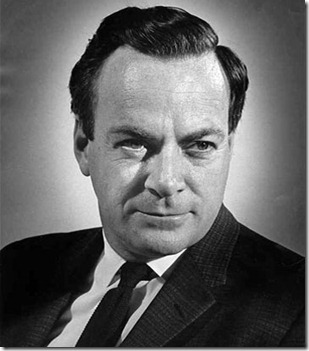

Traditionally, the origins of nanotechnology are traced back to December 29, 1959, when Professor Richard Feynman (a 1965 Nobel Prize winner in physics) presented a lecture entitled “There’s Plenty of Room at the Bottom” during the annual meeting of the American Physical Society at the California Institute of Technology (Caltech). In this talk, Feynman spoke about the principles of miniaturization and atomic-level precision and how these concepts do not violate any known law of physics. Feynman described a process by which the ability to manipulate individual atoms and molecules might be developed, using one set of precise tools to build and operate another proportionally smaller set, and so on down to the needed scale.

He described a field that few researchers had thought much about, let alone investigated. Feynman presented the idea of manipulating and controlling things on an extremely small scale by building and shaping matter one atom at a time. He proposed that it was possible to build a surgical nanoscale robot by developing quarter-scale manipulator hands that would build quarter-scale machine tools analogous to those found in machine shops, continuing until the nanoscale is reached, eight iterations later.

Printed Carbon Nanotube Transistor

Researchers from Aneeve Nanotechnologies have used low-cost ink-jet printing to fabricate the first circuits composed of fully printed back-gated and top-gated carbon nanotube-based electronics for use with OLED displays. OLED-based displays are used in cell phones, digital cameras and other portable devices.

But developing a lower-cost method for mass-producing such displays has been complicated by the difficulties of incorporating thin-film transistors that use amorphous silicon and polysilicon into the production process.

In this innovative study, the team made carbon nanotube thin-film transistors with high mobility and a high on-off ratio, completely based on ink-jet printing. They demonstrated the first fully printed single-pixel OLED control circuits, and their fully printed thin-film circuits showed significant performance advantages over traditional organic-based printed electronics.

This distinct process utilizes an ink-jet printing method that eliminates the need for expensive vacuum equipment and lends itself to scalable manufacturing and roll-to-roll printing. The team solved many material integration problems, developed new cleaning processes and created new methods for negotiating nano-based ink solutions.

For active-matrix OLED applications, the printed carbon nanotube transistors will be fully integrated with OLED arrays, the researchers said. The encapsulation technology developed for OLEDs will also keep the carbon nanotube transistors well protected, as the organics in OLEDs are very sensitive to oxygen and moisture.

(Adapted from PhysOrg)

Ink-jet-printed circuit. (Credit: University of California - Los Angeles)

35 fake Facebook websites

Security Web-Center found 35 Facebook phishing websites. The spammers behind them create fake pages that look like the Facebook login page. If you enter your email and password on one of these pages, the spammer records your information and keeps it. The fake sites use URLs similar to Facebook.com in an attempt to steal people's login information.
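Because the fake pages rely on look-alike URLs, one simple defence is to compare the host name of a link against the domain you expect. The following is a minimal sketch, assuming that only `facebook.com` and its subdomains count as legitimate; the function name `is_trusted_host` and the sample URLs are hypothetical and were invented for illustration.

```python
from urllib.parse import urlsplit

# Assumption: the only legitimate login host is facebook.com or one of its subdomains.
TRUSTED_DOMAIN = "facebook.com"


def is_trusted_host(url: str, trusted: str = TRUSTED_DOMAIN) -> bool:
    """Return True only if the URL's host is the trusted domain or a subdomain of it."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    return host == trusted or host.endswith("." + trusted)


if __name__ == "__main__":
    # Hypothetical example URLs; the look-alike hosts are made up.
    for url in (
        "https://www.facebook.com/login.php",
        "http://facebook.com.example.net/login.php",
        "http://faceb00k.example/login",
    ):
        verdict = "trusted" if is_trusted_host(url) else "suspicious"
        print(url, "->", verdict)
```

The check compares the parsed host rather than searching the whole URL for the string "facebook.com", since a phishing address can contain that string in a subdomain, path, or user-info part while pointing somewhere else.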

The people behind these websites then use the information to access victims' accounts and send messages to their friends, further propagating the illegitimate sites. In some instances, the phishers make money by exploiting the personal information they've obtained.

Graphene bubbles improve lithium-air batteries

A team of scientists from the Pacific Northwest National Laboratory and Princeton University used a new approach to build a graphene membrane for use in lithium-air batteries, which could one day replace conventional batteries in electric vehicles. Resembling coral, this porous graphene material could replace the traditional smooth graphene sheets in lithium-air batteries, which become clogged with tiny particles during use.

Resembling broken eggshells, graphene structures built around bubbles produced a lithium-air battery with the highest energy capacity to date. As an added bonus, the team’s new material does not rely on platinum or other precious metals, reducing its potential cost and environmental impact.

New botnets arrive

The recent breakup of the DNSChanger botnet, which infected more than 4 million computers and was under the control of a single ring of criminals, raised a new set of concerns. The biggest effect of the commoditization of botnet tools and other computer security exploits might be a new wave of major botnet attacks, driven by people who simply buy their malware from the equivalent of an app store, or who rent it as a service.

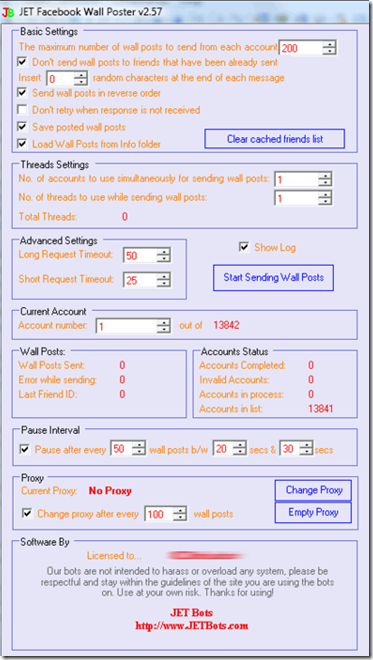

The botnet market is nothing new—it's been evolving for years. But what is new is the business model of botnet developers, which has matured to the point where it begins to resemble other, legitimate software markets. One example of this change is a Facebook and Twitter CAPTCHA bypass bot called JET, which is openly for sale online.

The JET Facebook posting bot from jetbots.com

Tor Project turns to Amazon

The Tor Project offers a channel for people who want to route their online communications anonymously. The channel has been used by activists to avoid censorship, as well as by those seeking anonymity for more nefarious reasons. Now the people involved in this project to maintain a secret layer of the internet have turned to Amazon to add bandwidth to the service. According to some experts, the use of Amazon's cloud service will make it harder for governments to track.

Amazon's cloud service, dubbed EC2 (Elastic Compute Cloud), offers virtual computer capacity. The Tor developers are calling on people to sign up to the service in order to run a bridge, a vital point of the secret network through which communications are routed. According to Tor developers, by setting up a bridge, you donate bandwidth to the Tor network and help improve the safety and speed at which users can access the internet.

World’s lightest material

Ultralight (<10 milligrams per cubic centimeter) cellular materials are desirable for thermal insulation, battery electrodes, catalyst supports, and acoustic, vibration, or shock energy damping. A team of researchers from UC Irvine, HRL Laboratories and the California Institute of Technology has developed the world's lightest material, with a density of 0.9 mg/cc. The new material redefines the limits of lightweight materials because of its unique "micro-lattice" cellular architecture. The researchers were able to make a material that consists of 99.99 percent air by designing the 0.01 percent solid at the nanometer, micron and millimeter scales. "The trick is to fabricate a lattice of interconnected hollow tubes with a wall thickness 1,000 times thinner than a human hair," said lead author Dr. Tobias Schaedler of HRL.
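The two figures quoted above can be cross-checked against each other. If only 0.01 percent of the volume is solid and the overall density is 0.9 mg/cc, the solid itself must have a density of about 9 g/cc, which is plausible for a dense metal. The sketch below does that arithmetic, assuming the quoted density does not count the mass of the air in the pores; the function name is hypothetical.

```python
def implied_solid_density(bulk_density_mg_cc: float, solid_fraction: float) -> float:
    """Density of the solid phase in g/cc, assuming the air contributes no mass."""
    if not 0 < solid_fraction <= 1:
        raise ValueError("solid_fraction must be in (0, 1]")
    # Mass per unit volume is carried entirely by the solid fraction.
    return bulk_density_mg_cc / solid_fraction / 1000.0  # mg/cc -> g/cc


if __name__ == "__main__":
    # Figures quoted in the article: 0.9 mg/cc overall, 0.01 percent solid by volume.
    print(round(implied_solid_density(0.9, 0.0001), 2), "g/cc")
```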

Photo by Dan Little, HRL Laboratories

The material's architecture allows unprecedented mechanical behavior for a metal, including complete recovery from compression exceeding 50 percent strain and extraordinarily high energy absorption. "Materials actually get stronger as the dimensions are reduced to the nanoscale," explained UCI mechanical and aerospace engineer Lorenzo Valdevit, UCI's principal investigator on the project. "Combine this with the possibility of tailoring the architecture of the micro-lattice and you have a unique cellular material."

William Carter, manager of the architected materials group at HRL, compared the new material to larger, more familiar edifices: "Modern buildings, exemplified by the Eiffel Tower or the Golden Gate Bridge, are incredibly light and weight-efficient by virtue of their architecture. We are revolutionizing lightweight materials by bringing this concept to the nano and micro scales."

Adapted from PhysOrg

Butterfly wings inspire new design

Engineers have been trying to create water repellent surfaces, but past attempts at artificial air traps tended to lose their contents over time due to external perturbations. Now an international team of researchers from Sweden, the United States, and Korea has taken advantage of what might normally be considered defects in the nanomanufacturing process to create a multilayered silicon structure that traps air and holds it for longer than one year.

Researchers mimicked the many-layered nanostructure of blue mountain swallowtail (Papilio ulysses) wings to make a silicon wafer that traps both air and light. The brilliant blue wings of this butterfly easily shed water because of the way ultra-tiny structures in the wings trap air and create a cushion between water and wing.

The researchers used an etching process to carve out micro-scale pores and sculpt tiny cones from the silicon. The team found that features of the resulting structure that might usually be considered defects, such as undercuts beneath the etching mask and scalloped surfaces, actually improved the water repellent properties of the silicon by creating a multilayered hierarchy of air traps. The intricate structure of pores, cones, bumps, and grooves also succeeded in trapping light, almost perfectly absorbing wavelengths just above the visible range.

US military ready for cyber warfare

The US military is now legally in the clear to launch offensive operations in cyberspace, the commander of the US Strategic Command said Wednesday, less than a month after terming this a work in progress.

Air Force General Robert Kehler's remarks were the latest sign of quickening U.S. military preparations for possible cyber warfare. "I do not believe that we need new explicit authorities to conduct offensive operations of any kind," he said. "I do not think there is any issue about authority to conduct operations," he added, referring to the legal framework.

But he said the military was still working its way through cyber warfare rules of engagement that lie beyond "area of hostilities," or battle zones, for which they have been approved.

The US Strategic Command is in charge of a number of areas for the US military, including space operations (like military satellites), cyberspace concerns, 'strategic deterrence' and combating WMDs. The U.S. Cyber Command, a sub-command, began operating in May 2010 as military doctrine, legal authorities and rules of engagement were still being worked out for what the military calls the newest potential battle "domain."

"When warranted, we will respond to hostile acts in cyberspace as we would to any other threat to our country," the DoD said in the report. "All states possess an inherent right to self-defense, and we reserve the right to use all necessary means – diplomatic, informational, military, and economic – to defend our nation, our allies, our partners, and our interests."

The Office of the National Counterintelligence Executive, a U.S. intelligence arm, said in a report to Congress last month that China and Russia are using cyber espionage to steal U.S. trade and technology secrets and that they will remain "aggressive" in these efforts.

It defined cyberspace as including the Internet, telecommunications networks, computer systems and embedded processors and controllers in "critical industries."

The Pentagon, in the report to Congress made public Tuesday, said it was seeking to deter aggression in cyberspace by building stronger defenses and by finding ways to make attackers pay a price.

Windows 8 first malware bootkit

An independent programmer and security analyst, Peter Kleissner, is planning to release the world's first Windows 8 bootkit in India, at the International Malware Conference (MalCon).

A bootkit is a rootkit that is able to load from a master boot record and persist in memory all the way through the transition to protected mode and the startup of the OS. It is a boot virus that is able to hook and patch Windows so that it gets loaded into the Windows kernel, thus gaining unrestricted access to the entire computer. It is even able to bypass full volume encryption, because the master boot record (where a bootkit such as Stoned is stored) is not encrypted. The master boot record contains the decryption software, which asks for a password and decrypts the drive. The master boot record is therefore the weak point that will be used to take over the whole system.
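To make the role of the master boot record more concrete, the sketch below parses the classic 512-byte MBR layout: 446 bytes of boot code that runs before the operating system, four 16-byte partition entries, and the two-byte signature `0x55 0xAA`. It is an illustration of the on-disk structure only, and it works on a synthetic sector built in memory rather than reading a real disk; the function name `parse_mbr` and the sample partition values are assumptions made for the example.

```python
import struct

SECTOR_SIZE = 512             # classic MBR sector size
PARTITION_TABLE_OFFSET = 446  # boot code occupies bytes 0..445
BOOT_SIGNATURE = b"\x55\xaa"  # stored at bytes 510..511


def parse_mbr(sector: bytes) -> dict:
    """Split a 512-byte MBR sector into boot code, partition entries, and signature."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("expected exactly 512 bytes")
    entries = []
    for i in range(4):
        start = PARTITION_TABLE_OFFSET + 16 * i
        raw = sector[start:start + 16]
        # Byte 0: boot flag, byte 4: partition type,
        # bytes 8..15: first LBA and sector count (little-endian 32-bit each).
        status, ptype = raw[0], raw[4]
        first_lba, sectors = struct.unpack_from("<II", raw, 8)
        if ptype != 0:  # type 0 marks an unused entry
            entries.append({"bootable": status == 0x80, "type": ptype,
                            "first_lba": first_lba, "sectors": sectors})
    return {
        "boot_code": sector[:PARTITION_TABLE_OFFSET],
        "partitions": entries,
        "valid_signature": sector[510:512] == BOOT_SIGNATURE,
    }


if __name__ == "__main__":
    # Synthetic sector with one made-up bootable partition; no disk is read.
    entry = bytes([0x80, 0, 0, 0, 0x07, 0, 0, 0]) + struct.pack("<II", 2048, 204800)
    sector = bytes(446) + entry + bytes(48) + BOOT_SIGNATURE
    info = parse_mbr(sector)
    print(len(info["boot_code"]), info["partitions"], info["valid_signature"])
```

The `boot_code` region is the part that executes first at startup, which is why code placed there runs before any disk decryption takes place.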

A bootkit is built upon the following broad parts:

- Infector

- Bootkit

- Drivers

- Plugins (the payload)

Stuxnet 3.0 released at MalCon?

Security researchers were shocked to see in a Twitter update from MalCon that one of the shortlisted research paper submissions covers possible features of Stuxnet 3.0. While this may just be a discussion and not a release, it is interesting to note that the speaker presenting the paper, Nima Bagheri, is from Iran.

The research paper abstract discusses rootkit features, and the authors may well give a demonstration at MalCon of new research related to hiding rootkits and advanced Stuxnet-like malware.

Stuxnet is a computer worm discovered in June 2010. It targets Siemens industrial software and equipment running Microsoft Windows. While it is not the first time that hackers have targeted industrial systems, it is the first discovered malware that spies on and subverts industrial systems, and the first to include a programmable logic controller (PLC) rootkit.

What is alarming is the recent discovery, on 1 September 2011, of a new worm thought to be related to Stuxnet by the Laboratory of Cryptography and System Security (CrySyS) of the Budapest University of Technology and Economics. After analyzing the malware, the laboratory named it Duqu. Symantec, based on this report, continued the analysis of the threat, calling it "nearly identical to Stuxnet, but with a completely different purpose", and published a detailed technical paper. The main component used in Duqu is designed to capture information such as keystrokes and system information, and this data may be used to enable a future Stuxnet-like attack.

New biosensor made of nanotubes

Standard sensors employ metal electrodes coated with enzymes that react with compounds and produce an electrical signal that can be measured. However, the inefficiency of those sensors leads to imperfect measurements. Now, scientists at Purdue University have developed a new method for stacking synthetic DNA and carbon nanotubes onto a biosensor electrode.

Carbon nanotubes, cylindrically shaped carbon molecules known to have excellent thermal and electrical properties, have been seen as a possibility for improving sensor performance. The problem is that the materials are not fully compatible with water, which limits their application in biological fluids.

Marshall Porterfield and Jong Hyun Choi have found a solution and reported their findings in the journal The Analyst, describing a sensor that essentially builds itself.

"In the future, we will be able to create a DNA sequence that is complementary to the carbon nanotubes and is compatible with specific biosensor enzymes for the many different compounds we want to measure," Porterfield said. "It will be a self-assembling platform for biosensors at the biomolecular level."

Choi developed a synthetic DNA that will attach to the surface of the carbon nanotubes and make them more water-soluble. "Once the carbon nanotubes are in a solution, you only have to place the electrode into the solution and charge it. The carbon nanotubes will then coat the surface," Choi said.

The electrode coated with carbon nanotubes will attract the enzymes to finish the sensor's assembly. The sensor described in the findings was designed for glucose. But Porterfield said it could be easily adapted for various compounds. "You could mass produce these sensors for diabetes, for example, for insulin management for diabetic patients," Porterfield said.

Operation Brotherhood Takedown

On Friday, the hacktivist collective known as Anonymous successfully disabled some prominent Egyptian Muslim Brotherhood websites. Anonymous is targeting the Muslim Brotherhood in Egypt, claiming the Muslim Brotherhood is a threat to the Egyptian revolution.

Earlier in the week, the hacktivist group had announced that it would launch a DDoS attack, “Operation Brotherhood Takedown,” on all Brotherhood sites at 8pm on Friday, 11 November, and it delivered.

Anonymous announced Saturday that DDoS attacks on the Muslim Brotherhood would continue until November 18.

The Brotherhood claimed in a statement released on Saturday morning that the attacks were coming from Germany, France, Slovakia and San Francisco in the US, with 2,000 to 6,000 hits per second. The hackers later escalated their attack on the site to 380,000 hits per second. Under the overload, four of the group’s websites were forced down temporarily.

FBI's Operation Ghost Click

A two-year FBI investigation, dubbed "Operation Ghost Click", has led to the arrest of a gang of internet bandits who stole $14 million after hacking into at least 4 million computers in an online advertising scam.

Six Estonian nationals have been arrested and charged with running a sophisticated Internet fraud ring that infected millions of computers worldwide with a virus and enabled the thieves to manipulate the multi-billion-dollar Internet advertising industry. Users of infected machines were unaware that their computers had been compromised—or that the malicious software rendered their machines vulnerable to a host of other viruses.

Beginning in 2007, the cyber ring used the “DNSChanger” malware to infect approximately 4 million computers in more than 100 countries. The malware redirected searches for Apple’s iTunes store to fake pages pretending to offer Apple software for sale, and sent those searching for information on the U.S. Internal Revenue Service to accounting company H&R Block, which allegedly paid those behind the scam a fee for each visitor via a fake internet ad agency.

Trend Micro, which helped supply information to the FBI on DNS Changer, hailed the law enforcement operation as the "biggest cyber criminal takedown in history." While the rogue DNS servers have been replaced, many computers may still be infected, so users should check whether their systems are part of the DNS Changer botnet.
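Since the scheme depended on rogue DNS servers, one way to check a machine is to compare its configured DNS resolver addresses against a list of known rogue address ranges. The sketch below shows the comparison only. The ranges in `ROGUE_RANGES` are placeholders taken from the address blocks reserved for documentation, not the real ranges used by the botnet, and the function name is hypothetical; a real check would use the ranges published by investigators.

```python
import ipaddress

# Placeholder ranges from reserved documentation blocks; NOT the real rogue ranges.
ROGUE_RANGES = [ipaddress.ip_network(n) for n in ("192.0.2.0/24", "198.51.100.0/24")]


def is_rogue_resolver(address: str, ranges=ROGUE_RANGES) -> bool:
    """Return True if the resolver address falls inside any listed range."""
    ip = ipaddress.ip_address(address)
    # Membership is False when the address and network differ in IP version.
    return any(ip in net for net in ranges)


if __name__ == "__main__":
    # Hypothetical resolver addresses, as they might be read from network settings.
    for resolver in ("192.0.2.53", "203.0.113.10"):
        status = "matches a listed range" if is_rogue_resolver(resolver) else "not listed"
        print(resolver, "->", status)
```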

Electron Tweezers

A recent paper by researchers from the National Institute of Standards and Technology (NIST) and the University of Virginia (UVA) demonstrates that the beams produced by modern electron microscopes can be used to manipulate nanoscale objects.

The tool is an electron version of the laser "optical tweezers" that have become a standard tool in biology, physics and chemistry for manipulating tiny particles, except that electron beams could offer a thousand-fold improvement in sensitivity and resolution.

If you just consider the physics, you might expect that a beam of focused electrons -- such as that created by a transmission electron microscope (TEM) -- could do the same thing as optical tweezers. However, that has never been seen, in part because electrons are much fussier to work with. They can't penetrate far through air, for example, so electron microscopes use vacuum chambers to hold specimens.

So Vladimir Oleshko and his colleague James Howe were surprised when, in the course of another experiment, they found themselves watching an electron tweezer at work. They were using an electron microscope to study, in detail, what happens when a metal alloy melts or freezes. They were observing a small particle -- a few hundred microns wide -- of an aluminum-silicon alloy held just at a transition point where it was partially molten, a liquid shell surrounding a core of still solid metal.

"This effect of electron tweezers was unexpected because the general purpose of this experiment was to study melting and crystallization," Oleshko explains. "We can generate this sphere inside the liquid shell easily; you can tell from the image that it's still crystalline. But we saw that when we move or tilt the beam -- or move the microscope stage under the beam -- the solid particle follows it, like it was glued to the beam."

Potentially, electron tweezers could be a versatile and valuable tool, adding very fine manipulation to the wide and growing list of uses for electron microscopy in materials science. "Of course, this is challenging because it requires a vacuum," he says, "but electron probes can be very fine, three orders of magnitude smaller than photon beams -- close to the size of single atoms. We could manipulate very small quantities, even single atoms, in a very precise way."

(Adapted from ScienceDaily)

Memory at the nanoscale

Metallic alloys can be stretched or compressed in such a way that they stay deformed once the strain on the material has been released. However, shape memory alloys can return to their original shape after being heated above a specific temperature.

Now, for the first time, two physicists from the University of Constance determined the absolute values of temperatures at which shape memory nanospheres start changing back to their memorized shape, undergoing the so-called structural phase transition, which depends on the size of particles studied. To achieve this result, they performed a computer simulation using nanoparticles with diameters between 4 and 17 nm made of an alloy of equal proportions of nickel and titanium.

To date, research efforts to establish structural phase transition temperature have mainly been experimental. Most of the prior work on shape memory materials was in macroscopic scale systems and used for applications such as dental braces, stents or oil temperature-regulating devices for bullet trains.

Thanks to a computerized method known as molecular dynamics simulation, Daniel Mutter and Peter Nielaba were able to visualize the transformation process of the material during the transition. As the temperature increased, they showed that the material's atomic-scale crystal structure shifted from a lower to a higher level of symmetry. They found that the strong influence of the energy difference between the low- and high-symmetry structure at the surface of the nanoparticle, which differed from that in its interior, could explain the transition.
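At its core, a molecular dynamics simulation repeatedly advances atomic positions and velocities in small time steps under the forces acting between the atoms. The sketch below shows one common time-stepping scheme, velocity Verlet, on a toy one-dimensional spring. It is not the method or the nickel-titanium interaction model used in the study, which the article does not describe; the force law, units and parameter values are assumptions chosen only to keep the example short.

```python
def velocity_verlet(x, v, force, mass, dt, steps):
    """Advance position x and velocity v with the velocity Verlet scheme."""
    a = force(x) / mass
    for _ in range(steps):
        x = x + v * dt + 0.5 * a * dt * dt   # position update with current acceleration
        a_new = force(x) / mass              # acceleration at the new position
        v = v + 0.5 * (a + a_new) * dt       # velocity update with averaged acceleration
        a = a_new
    return x, v


if __name__ == "__main__":
    k, m = 1.0, 1.0                          # toy spring constant and mass (arbitrary units)

    def spring(x):
        return -k * x                        # harmonic restoring force

    def energy(x, v):
        return 0.5 * m * v * v + 0.5 * k * x * x

    x0, v0 = 1.0, 0.0
    x1, v1 = velocity_verlet(x0, v0, spring, m, dt=0.01, steps=10_000)
    print("energy drift:", abs(energy(x1, v1) - energy(x0, v0)))
```

A full simulation applies the same kind of update to every atom in three dimensions, with forces derived from a model of the interactions between neighbouring atoms.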

Potential new applications include the creation of nanoswitches, where laser irradiation could heat up such shape memory material, triggering a change in its length that would, in turn, function as a switch.

Operation Muslim Brotherhood

Anonymous is targeting the Muslim Brotherhood in Egypt, claiming the organization is a threat to the Egyptian revolution, and plans a coordinated Distributed Denial of Service attack on Nov. 11.

Monday, those claiming to represent the international hacktivist collective released a YouTube video announcing an operation directed at the Muslim Brotherhood. According to the announcement, the Muslim Brotherhood is a “corrupt” organization “bent on taking over sovereign Arab states in its quest to seize power.” The announcement goes on to compare the Muslim Brotherhood to the Church of Scientology, and declares the Brotherhood to be “a threat to the people.”

Like any announcement from those claiming to represent Anonymous, there are no guarantees. The ultimate success or failure of any Anonymous operation is determined by the hive mind. Whether or not Anonymous manages to launch a successful operation against the Muslim Brotherhood remains to be seen.

Quantum Cloning Advances

Quantum cloning is the process that takes an arbitrary, unknown quantum state and makes an exact copy without altering the original state in any way. Perfect quantum cloning is forbidden by the laws of quantum mechanics, as shown by the no-cloning theorem. Imperfect cloning is possible, however: the copies then have a non-unit fidelity with the state being cloned.

Approximate cloning is the best available way to copy quantum information, so it is an important task in quantum information processing, especially in the context of quantum cryptography. Researchers are seeking ways to build quantum cloning machines that work at the so-called quantum limit. Quantum cloning is difficult because the laws of quantum mechanics allow only an approximate copy of an original quantum state, not an exact one, since measuring the state before cloning would alter it. The first cloning machine relied on stimulated emission to copy quantum information encoded into single photons.

A team from Henan Universities in China, in collaboration with another team at the Institute of Physics of the Chinese Academy of Sciences, has now produced a theory for a quantum cloning machine able to produce several copies of the quantum state of a particle at atomic or sub-atomic scale. The advance could have implications for quantum information processing methods used, for example, in message encryption systems.

In this study, researchers have demonstrated that it is theoretically possible to create four approximate copies of an initial quantum state, in a process called asymmetric cloning. The authors have extended previous work that was limited to quantum cloning providing only two or three copies of the original state. One key challenge was that the quality of the approximate copy decreases as the number of copies increases.
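The trade-off between the number of copies and their quality can be illustrated with a simpler, well-known case than the asymmetric machine described in the study: the optimal symmetric universal cloner for qubits, where each of M copies made from N identical originals has fidelity F = (MN + M + N) / (M(N + 2)). The sketch below only evaluates that formula; the function name is ours, and the numbers are not results from the study.

```python
from fractions import Fraction


def universal_clone_fidelity(n_originals: int, m_copies: int) -> Fraction:
    """Fidelity of each copy for the optimal symmetric universal qubit cloner."""
    if n_originals < 1 or m_copies < n_originals:
        raise ValueError("need 1 <= n_originals <= m_copies")
    n, m = n_originals, m_copies
    return Fraction(m * n + m + n, m * (n + 2))


# Fidelity falls as more copies are requested from a single original.
for m in (1, 2, 3, 4):
    f = universal_clone_fidelity(1, m)
    print(f"1 -> {m} copies: fidelity {f} (about {float(f):.3f})")
```

A fidelity of 1 means a perfect copy; in this symmetric case the value drops toward 2/3 as the number of copies grows.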

The authors were able to optimize the quality of the cloned copies, thus yielding four good approximations of the initial quantum state. They have also demonstrated that their quantum cloning machine has the advantage of being universal and is therefore able to work with any quantum state, ranging from a photon to an atom. Asymmetric quantum cloning has applications in analyzing the security of message encryption systems based on shared secret quantum keys.

Anonymous continues #OpDarkNet

Anonymous exposes 190 Internet pedophiles as part of the still ongoing Operation DarkNet.

Early this week, those claiming to represent the hacktivist collective known as Anonymous released the IP addresses of 190 alleged Internet pedophiles. According to them, the group planned and successfully executed a complex social engineering operation dubbed “Paw Printing.”

By tricking kiddie porn enthusiasts into downloading a phony security upgrade, the hacktivists were able to track and record the IP addresses of pedophiles visiting known child pornography sites like Lolita City and Hard Candy, hosted somewhere in the creepy world of the DarkNet.

The DarkNet is a mysterious and deliberately hidden part of the Internet where criminals and others in need of anonymity and privacy mingle. Within the hidden world of this so-called Invisible Web one might engage in myriad activities, legal and otherwise. On the DarkNet one might buy or sell drugs, obtain or sell fake IDs, sponsor terrorism, rent a botnet or trade in kiddie porn.

In their announcement, Anonymous gives a detailed account of their month-long child pornography sting, which culminated in late October when, over a 24-hour period, Anonymous collected the 190 IP addresses associated with the alleged Internet pedophiles.

The hacktivists claim they are not out to destroy the DarkNet, only to expose pedophiles who use the anonymity and clandestine nature of that hidden part of the Web to exploit innocent children for perverse sexual gratification.

Anonymous exposes pedophilia

Operation Darknet

Tuesday, after hacking into Lolita City, Anonymous exposed a large ring of Internet pedophiles.

Lolita City, a darknet website used by pedophiles to trade in child pornography, hosted something like 1,589 pedophiles trading in kiddie porn. A darknet website is a closed private network of computers used for file sharing, sometimes referred to as a hidden wiki. Darknet websites are part of the Invisible Web which is not indexed by standard search engines.

In Tuesday’s Pastebin release, Anonymous explained the technical side of how they were able to locate and identify Lolita City and access its user database. In a prior Pastebin release, Anonymous offered a timeline of events detailing the discovery of the hidden cache of more than 100 gigabytes of child porn associated with Lolita City.

The following is a statement concerning Operation Darknet, and a list of demands released by Anonymous:

------------------------

Our Statement

------------------------

The owners and operators at Freedom Hosting are openly supporting child pornography and enabling pedophiles to view innocent children, fueling their issues and putting children at risk of abduction, molestation, rape, and death.

For this, Freedom Hosting has been declared #OpDarknet Enemy Number One.

By taking down Freedom Hosting, we are eliminating 40+ child pornography websites, among these is Lolita City, one of the largest child pornography websites to date containing more than 100GB of child pornography.

We will continue to not only crash Freedom Hosting's server, but any other server we find to contain, promote, or support child pornography.

------------------------

Our Demands

------------------------

Our demands are simple. Remove all child pornography content from your servers. Refuse to provide hosting services to any website dealing with child pornography. This statement is not just aimed at Freedom Hosting, but everyone on the internet. It does not matter who you are, if we find you to be hosting, promoting, or supporting child pornography, you will become a target.

The Invisible Web

The Invisible Web (also called Deepnet, the Deep Web, DarkNet, Undernet, or the hidden Web) refers to World Wide Web content that is not part of the Surface Web, which is indexed by standard search engines. Some have said that searching on the Internet today can be compared to dragging a net across the surface of the ocean: a great deal may be caught in the net, but there is a wealth of information that is deep and therefore missed.

Most of the Web's information is buried far down on dynamically generated sites, and standard search engines do not find it. Traditional search engines cannot find or retrieve content in the deep Web – those pages do not exist until they are created dynamically as the result of a specific search. The deep Web is several orders of magnitude larger than the surface Web.

To discover content on the Web, search engines use web crawlers that follow hyperlinks. This technique is ideal for discovering resources on the surface Web but is often ineffective at finding Invisible Web resources. For example, these crawlers do not attempt to find dynamic pages that are the result of database queries due to the infinite number of queries that are possible.
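The crawling behaviour described above can be sketched with a toy, in-memory link graph. Everything here is a made-up illustration (the page names, the `LINKS` table and the `crawl` function are assumptions, not any real search engine's code): the crawler finds every page reachable through hyperlinks, while pages that exist only as the answer to a form query are never discovered.

```python
from collections import deque

# Hypothetical site: each page maps to the hyperlinks it contains.
LINKS = {
    "/": ["/about", "/catalog"],
    "/about": ["/"],
    "/catalog": ["/catalog/page2"],
    "/catalog/page2": [],
}

# Pages generated only in response to a database query; nothing links to them.
QUERY_ONLY = {"/search?q=nickel+titanium", "/search?q=shape+memory"}


def crawl(start, links):
    """Breadth-first crawl that follows hyperlinks from the start page."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


indexed = crawl("/", LINKS)
print("indexed:", sorted(indexed))
print("missed: ", sorted(QUERY_ONLY - indexed))
```

Because the number of possible queries is unbounded, a crawler of this kind has no list of query pages to enumerate, which is why they stay outside the index.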

Anonymous Defense

Jay Leiderman, a lawyer representing Christopher Doyon, alleged Anonymous hacktivist Commander X, argues that a Distributed Denial of Service (DDoS) attack is not a crime, but a form of legal protest, a digital sit-in, and protected speech.

Wednesday, Leiderman, speaking to TPM, said: “There’s no such thing as a DDoS attack. A DDoS is a protest; it’s a digital sit-in. It is no different than physically occupying a space. It’s not a crime, it’s speech. Nothing was malicious, there was no malware, no Trojans. This was merely a digital sit-in.”

A Distributed Denial of Service (DDoS) attack is a planned attempt to make a computer resource unavailable to its intended users. Multiple users send repeated requests to a website, thus flooding the servers.

It is important to distinguish between a voluntary DDoS attack, the type of attack Anonymous is known for, and the involuntary DDoS attack which involves using “botnets” made up of slave computers infected with malware, all done without the knowledge or permission of the computer’s owner.

The voluntary type of DDoS involves a high volume of users all voluntarily participating, requesting information from the same targeted server simultaneously, and thus making the selected computer resource unavailable to intended users, just as a sit-in blocks access to a particular site or resource.

There are several similar Anonymous-related cases headed for court across the US and other countries. Whether or not a judge and jury will accept the sit-in defense remains to be seen. Yet one thing seems certain: those who in good conscience participated in a relatively harmless cyber protest like a voluntary DDoS attack should not be subject to felony charges.

It's an interesting defense, and it might be tricky to prove that there is something illegal about a lot of people doing something legal (accessing a web site) without causing any demonstrable harm...

On the other hand, prosecutors can claim that these attacks seriously hampered the flow of business and caused serious financial damage in the process.

Let's wait and see what happens...

Harvard University website hacked

Harvard University website attacked by Syrian hackers

Syrian hackers have hit the website of Harvard University, one of America’s most prestigious universities. The hacked home page showed the message "Syrian Electronic Army Were Here" along with a picture of the Syrian president, Bashar al-Assad.

A Harvard spokesman stated: "The University's homepage was compromised by an outside party this morning. We took down the site for several hours in order to restore it. The attack appears to have been the work of a sophisticated individual or group."

The hackers also criticized US policy towards President Assad's regime and wrote several threats to the US. The defaced page stayed online for nearly an hour.

LulzSec suspected members arrested

The FBI has recently arrested two alleged members of the hacking groups LulzSec and Anonymous in Phoenix and San Francisco.

The person arrested in Arizona is a student at a technical university and allegedly participated in the widely publicized hack against Sony.

The suspected hacker arrested in California, who is reported to be homeless, is alleged to have been involved in the hacking of Santa Cruz County government websites. Being homeless, of course, doesn't mean that a man can't get an internet connection. Coffee houses, cafes, libraries, etc. can all offer cheap or free internet access, and because the computer being used can be a shared device, it may be harder to identify who was responsible for an attack than with a PC at home.

These arrests shouldn't surprise anyone because they made two fundamental mistakes: They brought too much attention to themselves and they didn't cover up their tracks.

The logs maintained by HideMyAss.com, in addition to other evidence, have led to the arrest of Cody Kretsinger, 23, from Phoenix, who allegedly used this anonymity service during his role in the attack on Sony Pictures.

According to HideMyAss.com, “…services such as ours do not exist to hide people from illegal activity. We will cooperate with law enforcement agencies if it has become evident that your account has been used for illegal activities.” The service stores logs for 30 days for its Website proxy services, and for those using its VPN offerings it stores the connecting IP address as well as time stamps.

The FBI believes that the homeless man they arrested was "Commander X", a member of the People's Liberation Front (PLF) associated with Anonymous hacktivism. He faces a maximum sentence of 15 years in prison if convicted.

The Future of Computers - Artificial Intelligence

What is Artificial Intelligence?

The term “Artificial Intelligence” was coined by John McCarthy in his proposal for the 1956 Dartmouth conference; he later defined it as the science and engineering of making intelligent machines.

Today it is a branch of computer science that aims to make computers behave like humans. The field is defined as the study and design of intelligent agents, where an intelligent agent is a system that perceives its environment and takes actions that maximize its chances of success.

This new science was founded on the claim that a central property of humans, intelligence—the sapience of Homo Sapiens—can be so precisely described that it can be simulated by a machine. This raises philosophical issues about the nature of the mind and the ethics of creating artificial beings, issues which have been addressed by myth, fiction and philosophy since antiquity.

Artificial Intelligence includes programming computers to make decisions in real-life situations (e.g. some of these “expert systems” help physicians in the diagnosis of diseases based on symptoms), programming computers to understand human languages (natural language), programming computers to play games such as chess and checkers (game playing), programming computers to hear, see and react to other sensory stimuli (robotics), and designing systems that mimic human intelligence by attempting to reproduce the types of physical connections between neurons in the human brain (neural networks).

History of Artificial Intelligence

The Greek myth of Pygmalion is the story of a statue brought to life for the love of her sculptor. The Greek god Hephaestus' robot Talos guarded Crete from attackers, running the circumference of the island 3 times a day. The Greek Oracle at Delphi was history's first chatbot and expert system.

In the 3rd century BC, Chinese engineer Mo Ti created mechanical birds, dragons, and warriors. Technology was being used to transform myth into reality.

Much later, the Royal courts of Enlightenment-age Europe were endlessly amused by mechanical ducks and humanoid figures, crafted by clockmakers. It has long been possible to make machines that looked and moved in human-like ways - machines that could spook and awe the audience - but creating a model of the mind was off limits.

However, writers and artists were not bound by the limits of science in exploring extra-human intelligence, and the Jewish myth of the Golem, Mary Shelley's Frankenstein, all the way through to Forbidden Planet's Robby the Robot and 2001's HAL 9000, gave us new - and troubling - versions of the manufactured humanoid.

In the 1600s, engineering and philosophy began a slow merger that continues today. From that union the first mechanical calculator was born, at a time when the world's philosophers were seeking to encode the laws of human thought into complex, logical systems.

The mathematician, Blaise Pascal, created a mechanical calculator in 1642 (to enable gambling predictions). Another mathematician, Gottfried Wilhelm von Leibniz, improved Pascal's machine and made his own contribution to the philosophy of reasoning by proposing a calculus of thought.

Many of the leading thinkers of the 18th and 19th century were convinced that a formal reasoning system, based on a kind of mathematics, could encode all human thought and be used to solve every sort of problem. Thomas Jefferson, for example, was sure that such a system existed, and only needed to be discovered. The idea still has currency - the history of recent artificial intelligence is replete with stories of systems that seek to "axiomatize" logic inside computers.

From 1800 on, the philosophy of reason picked up speed. George Boole proposed a system of "laws of thought," Boolean Logic, which uses "AND", "OR" and "NOT" to establish how ideas and objects relate to each other. Nowadays most Internet search engines use Boolean logic in their searches.
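How AND, OR and NOT combine in a search can be shown with a tiny inverted index. This is only a sketch under our own assumptions: the three documents, the `build_index` and `term` helpers and the whole layout are invented for illustration, and real search engines do far more than this.

```python
# Hypothetical mini-collection of documents, keyed by document id.
DOCS = {
    1: "carbon nanotube transistor",
    2: "quantum cloning machine",
    3: "quantum computer uses carbon nanotube",
}


def build_index(docs):
    """Map each word to the set of document ids that contain it."""
    index = {}
    for doc_id, text in docs.items():
        for word in text.split():
            index.setdefault(word, set()).add(doc_id)
    return index


INDEX = build_index(DOCS)
ALL_IDS = set(DOCS)


def term(word):
    """Documents containing the word (empty set if the word is unknown)."""
    return INDEX.get(word, set())


# Boolean operators become set operations on the matching document ids.
quantum_and_carbon = term("quantum") & term("carbon")              # AND
quantum_or_carbon = term("quantum") | term("carbon")               # OR
quantum_not_carbon = term("quantum") & (ALL_IDS - term("carbon"))  # AND NOT

print(quantum_and_carbon, quantum_or_carbon, quantum_not_carbon)
```

AND maps to set intersection, OR to union, and NOT to the complement within the collection.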

Recent history and the future of Artificial Intelligence

In the early part of the 20th century, multidisciplinary interests began to converge and engineers began to view brain synapses as mechanistic constructs. A new word, cybernetics, i.e., the study of communication and control in biological and mechanical systems, became part of our colloquial language. Claude Shannon pioneered a theory of information, explaining how information was created and how it might be encoded and compressed.

Enter the computer. Modern artificial intelligence (albeit not so named until later) was born in the first half of the 20th century, when the electronic computer came into being. The computer's memory was a purely symbolic landscape, and the perfect place to bring together the philosophy and the engineering of the last 2000 years. The pioneer of this synthesis was the British logician and computer scientist Alan Turing.

AI research is highly technical and specialized, deeply divided into subfields that often fail to communicate with each other. Subfields have grown up around particular institutions, the work of individual researchers, the solution of specific problems, longstanding differences of opinion about how AI should be done and the application of widely differing tools. The central problems of AI include such traits as reasoning, knowledge, planning, learning, communication, perception and the ability to move and manipulate objects.

Natural-language processing would allow ordinary people who don’t have any knowledge of programming languages to interact with computers. So what does the future of computer technology look like after these developments? Through nanotechnology, computing devices are becoming progressively smaller and more powerful. Everyday devices with embedded technology and connectivity are becoming a reality. Nanotechnology has led to the creation of increasingly smaller and faster computers that can be embedded into small devices.

This has led to the idea of pervasive computing, which aims to integrate software and hardware into all man-made and some natural products. It is predicted that almost any item, such as clothing, tools, appliances, cars, homes, coffee mugs and the human body, will be embedded with chips that will connect the device to an infinite network of other devices.

Hence, in the future network technologies will be combined with wireless computing, voice recognition, Internet capability and artificial intelligence with an aim to create an environment where the connectivity of devices is embedded in such a way that the connectivity is not inconvenient or outwardly visible and is always available. In this way, computer technology will saturate almost every facet of our life. What seems like virtual reality at the moment will become the human reality in the future of computer technology.

The Future of Computers - Optical Computers

An optical computer (also called a photonic computer) is a device that performs its computation using photons of visible light or infrared (IR) beams, rather than electrons in an electric current. The computers we use today use transistors and semiconductors to control electricity but computers of the future may utilize crystals and metamaterials to control light.

An electric current creates heat in computer systems, and as the processing speed increases, so does the amount of electricity required; this extra heat is extremely damaging to the hardware. Photons, however, create substantially less heat than electrons on a given size scale, so the development of more powerful processing systems becomes possible. By applying some of the advantages of visible and/or IR networks at the device and component scale, a computer might someday be developed that can perform operations significantly faster than a conventional electronic computer.

Coherent light beams, unlike electric currents in metal conductors, pass through each other without interfering; electrons repel each other, while photons do not. For this reason, signals over copper wires degrade rapidly while fiber optic cables do not have this problem. Several laser beams can be transmitted in such a way that their paths intersect, with little or no interference among them - even when they are confined essentially to two dimensions.

Electro-Optical Hybrid computers

Most research projects focus on replacing current computer components with optical equivalents, resulting in an optical digital computer system processing binary data. This approach appears to offer the best short-term prospects for commercial optical computing, since optical components could be integrated into traditional computers to produce an optical/electronic hybrid. However, optoelectronic devices lose about 30% of their energy converting electrons into photons and back and this switching process slows down transmission of messages.

Pure Optical Computers

All-optical computers eliminate the need for switching. These computers will use multiple frequencies to send information throughout the computer as light waves and packets, so they have no electron-based systems and need no conversion from electrical to optical, which greatly increases speed.

The Future of Computers - Quantum nanocomputers

A quantum computer uses quantum mechanical phenomena, such as entanglement and superposition, to process data. Quantum computation aims to use the quantum properties of particles to represent and structure data, and uses quantum mechanics to understand how to perform operations with this data.

The quantum mechanical properties of atoms or nuclei allow these particles to work together as quantum bits, or qubits. These qubits work together to form the computer’s processor and memory. They can interact with each other while being isolated from the external environment, which enables them to perform certain calculations much faster than conventional computers. By computing many different numbers simultaneously and then interfering the results to get a single answer, a quantum computer can perform a large number of operations in parallel and ends up being much more powerful than a digital computer of the same size.
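Superposition and interference can be seen in the smallest possible case, a single qubit simulated on an ordinary computer. This is a minimal sketch rather than a quantum program: the `hadamard` and `probabilities` helpers are our own names, the state is a pair of amplitudes for |0> and |1>, and a classical simulation like this gains no speedup.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp_0, amp_1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Measurement probabilities for outcomes 0 and 1."""
    return tuple(abs(amp) ** 2 for amp in state)


zero = (1.0, 0.0)              # qubit prepared in |0>
superposed = hadamard(zero)    # equal superposition of |0> and |1>
back = hadamard(superposed)    # the two paths interfere

print(probabilities(superposed))  # approximately (0.5, 0.5)
print(probabilities(back))        # approximately (1.0, 0.0)
```

After the second gate the amplitudes for outcome 1 cancel while those for outcome 0 reinforce, which is the "interfering the results" step in miniature.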

I promise to write a series of articles on quantum issues very shortly...

The Future of Computers - Mechanical nanocomputers

Pioneers such as Eric Drexler proposed as far back as the mid-1980s that nanoscale mechanical computers could be built via molecular manufacturing through a process of mechanical positioning of atoms or molecular building blocks one atom or molecule at a time, a process known as “mechanosynthesis.”

Once assembled, the mechanical nanocomputer, other than being greatly scaled down in size, would operate much like a complex, programmable version of the mechanical calculators used during the 1940s to 1970s, preceding the introduction of widely available, inexpensive solid-state electronic calculators.

Drexler's theoretical design used rods sliding in housings, with bumps that would interfere with the motion of other rods. It was a mechanical nanocomputer that uses tiny mobile components called nanogears to encode information.

Drexler and his collaborators favored designs that resemble miniature versions of Charles Babbage's 19th-century analytical engines: mechanical nanocomputers that would calculate using moving molecular-scale rods and rotating molecular-scale wheels, spinning on shafts and bearings.

For this reason, mechanical nanocomputer technology has sparked controversy and some researchers even consider it unworkable. All the problems inherent in Babbage's apparatus, according to the naysayers, are magnified a millionfold in a mechanical nanocomputer. Nevertheless, some futurists are optimistic about the technology, and have even proposed the evolution of nanorobots that could operate, or be controlled by, mechanical nanocomputers.

The Future of Computers - Chemical and Biochemical nanocomputers

Chemical Nanocomputer

In general terms, a chemical computer is one that processes information by making and breaking chemical bonds and stores logic states or information in the resulting chemical (i.e., molecular) structures. In a chemical nanocomputer, computing is based on chemical reactions (bond breaking and forming): the inputs are encoded in the molecular structure of the reactants, and the outputs can be extracted from the structure of the products. In other words, these computers use the interaction between different chemicals and their structures to store and process information.

These computing operations would be performed selectively among molecules taken just a few at a time in volumes only a few nanometers on a side so, in order to create a chemical nanocomputer, engineers need to be able to control individual atoms and molecules so that these atoms and molecules can be made to perform controllable calculations and data storage tasks. The development of a true chemical nanocomputer will likely proceed along lines similar to genetic engineering.

Biochemical nanocomputers

Both chemical and biochemical nanocomputers would store and process information in terms of chemical structures and interactions. Proponents of biochemically based computers can point to an “existence proof” for them in the commonplace activities of humans and other animals with multicellular nervous systems. So biochemical nanocomputers already exist in nature; they are manifest in all living things. But these systems are largely uncontrollable by humans, and artificial fabrication or implementation of this category of “natural” biochemically based computers seems far off because the mechanisms of animal brains and nervous systems are still poorly understood. We cannot, for example, program a tree to calculate the digits of pi, or program an antibody to fight a particular disease (although medical science has come close to this ideal in the formulation of vaccines, antibiotics, and antiviral medications).

DNA nanocomputer

In 1994, Leonard Adleman took a giant step towards a different kind of chemical or artificial biochemical computer when he used fragments of DNA to compute the solution to a complex graph theory problem.

Using the tools of biochemistry, Adleman was able to extract the correct answer to the graph theory problem out of the many random paths represented by the product DNA strands. Like a computer with many processors, this type of DNA computer is able to consider many solutions to a problem simultaneously. Moreover, the DNA strands employed in such a calculation (approximately 10^17) are many orders of magnitude greater in number and more densely packed than the processors in today's most massively parallel electronic supercomputer. As a result of the Adleman work, the chemical nanocomputer is the only one of the aforementioned four types to have been demonstrated for an actual calculation.
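Adleman's problem was a Hamiltonian path problem: find a route through a directed graph that starts and ends at given vertices and visits every vertex exactly once. The generate-then-filter idea can be sketched in ordinary code. The four-vertex graph and the function below are assumptions made up for illustration, and where the DNA strands form all candidate paths at once in the test tube, this loop checks them one after another.

```python
from itertools import product

# Hypothetical directed graph, given as a set of (from, to) edges.
EDGES = {(0, 1), (0, 2), (1, 2), (2, 3), (1, 3), (3, 1)}
N_VERTICES = 4
START, END = 0, 3


def hamiltonian_paths(edges, n, start, end):
    """Generate every vertex sequence of length n, then filter as Adleman did."""
    found = []
    for candidate in product(range(n), repeat=n):
        # Keep only sequences with the right start and end vertices.
        if candidate[0] != start or candidate[-1] != end:
            continue
        # Keep only sequences whose consecutive steps follow real edges.
        if any((a, b) not in edges for a, b in zip(candidate, candidate[1:])):
            continue
        # Keep only sequences that visit every vertex exactly once.
        if len(set(candidate)) != n:
            continue
        found.append(candidate)
    return found


paths = hamiltonian_paths(EDGES, N_VERTICES, START, END)
print(paths)
```

The number of candidates grows as n to the power n, which is why the massive parallelism of the DNA strands matters for anything larger than a toy graph.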
